power on-then-off, so it's better not to inflict it on fragile electronics.

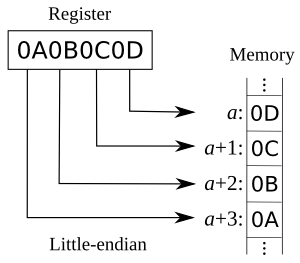

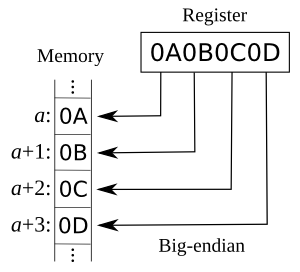

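Endianness refers to the order in which the bytes of a multi-byte value are stored in memory: big-endian puts the most significant byte at the lowest address, while little-endian puts the least significant byte there.

The Intel x86 processor architecture is little-endian.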
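
A quick way to see the two byte orders side by side is Python's standard `struct` module. The value `0x12345678` is an arbitrary example; the format prefix `<` means little-endian and `>` means big-endian.

```python
import struct

value = 0x12345678  # arbitrary 32-bit example value

# 'I' = unsigned 32-bit integer; '<' = little-endian, '>' = big-endian
little = struct.pack("<I", value)
big = struct.pack(">I", value)

print(little.hex())  # 78563412: least significant byte first (x86 order)
print(big.hex())     # 12345678: most significant byte first

# Reading little-endian bytes with the wrong convention yields a different number
misread = struct.unpack(">I", little)[0]
print(hex(misread))  # 0x78563412

# int.to_bytes gives the same result as struct.pack
assert value.to_bytes(4, "little") == little
```

This kind of mismatch is what goes wrong when binary data written on an x86 machine is read by code that assumes big-endian (network) byte order.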

ICH stands for I/O Controller Hub, Intel's southbridge chip. It manages most of the peripheral ports and buses (e.g. USB, SATA, PCI).

USB 2.0 cables carry 4 wires (VBUS, D+, D-, GND) plus a shield; Mini-USB and Micro-USB connectors add a fifth pin (ID).

| Level | Usage | Details | Pros | Cons |
|---|---|---|---|---|
| 0 | striping | | maximal write performance | no data protection |
| 1 | mirroring | | highest-performing RAID level | lower usable capacity |
| 5 | striping with rotating parity | | | |
| 6 | striping with rotating double-parity | | | |
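
To make "striping with rotating parity" concrete, here is a minimal sketch of RAID 5 parity, assuming a single stripe on a hypothetical 4-drive array. Parity is the byte-wise XOR of the data blocks, so any one missing block can be rebuilt by XOR-ing everything that survives. The `parity_drive` helper is an illustrative rotation, not any particular controller's layout.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equal-length blocks (the RAID 5 parity operation)."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def parity_drive(stripe_index, n_drives):
    """Drive holding parity for a given stripe. Illustrative rotation only;
    real implementations use specific layouts (e.g. left-symmetric)."""
    return (n_drives - 1 - stripe_index) % n_drives


# One stripe on a hypothetical 4-drive RAID 5: 3 data blocks + 1 parity block
data = [b"AAAA", b"BBBB", b"CCCC"]
parity = xor_blocks(data)

# The drive holding data[1] fails: XOR of the survivors and the parity rebuilds it
rebuilt = xor_blocks([data[0], data[2], parity])
assert rebuilt == data[1]

# Parity moves to a different drive on each stripe, so no single drive
# absorbs every parity write
print([parity_drive(s, 4) for s in range(8)])  # [3, 2, 1, 0, 3, 2, 1, 0]
```

RAID 6 adds a second, independent syndrome computed with Galois-field arithmetic rather than a second plain XOR, which is what lets it recover two missing blocks per stripe.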

I used to think RAID 5 and RAID 6 were the best RAID configs to use. They seemed to offer the best bang for the buck. However, after seeing how long it took to rebuild an array after a drive failed (over 3 days), I'm much more hesitant to use those RAID levels. I much prefer RAID 1+0, even though the overall cost is nearly double that of RAID 5. It's much faster, and there is no parity rebuild if the RAID controller is smart enough: you just swap the failed drive, the controller keeps running on the surviving mirror, and then copies it onto the new drive. It's simply much faster and much less prone to multiple drive failures killing the entire RAID.
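
To put numbers on the "nearly double" cost, a small helper can compute usable capacity per RAID level. This is a sketch under simplifying assumptions: 8 × 4 TB drives is an arbitrary example, RAID 10 is assumed to be built from 2-way mirrored pairs, and hot spares and filesystem overhead are ignored.

```python
def usable_tb(level: str, n_drives: int, drive_tb: float) -> float:
    """Usable capacity for common RAID levels (ignores spares and metadata)."""
    if level == "0":
        return n_drives * drive_tb           # pure striping, no redundancy
    if level == "1":
        return drive_tb                      # every drive holds the same copy
    if level == "5":
        return (n_drives - 1) * drive_tb     # one drive's worth of parity
    if level == "6":
        return (n_drives - 2) * drive_tb     # two drives' worth of parity
    if level == "10":
        if n_drives % 2:
            raise ValueError("this sketch assumes RAID 10 uses an even number of drives")
        return n_drives / 2 * drive_tb       # half the drives are mirror copies
    raise ValueError(f"unsupported RAID level: {level}")


for level in ("0", "5", "6", "10"):
    print(f"RAID {level:>2}: {usable_tb(level, 8, 4):g} TB usable out of 32 TB raw")
```

With these numbers RAID 5 yields 28 TB and RAID 10 yields 16 TB, so each usable terabyte costs 28 / 16 = 1.75 times as much on RAID 10.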

The problem is not only the rebuild time (which is indeed horrible on a loaded system), but also write performance, which can be terrible for write-heavy workloads.
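
The write slowdown can be estimated with the classic "write penalty" rule of thumb: a small random write on RAID 5 costs 4 back-end I/Os (read old data, read old parity, write new data, write new parity), 6 on RAID 6, and 2 on a mirror. The sketch below applies that rule; the 8-drive, 150-IOPS-per-drive, 70%-write array is hypothetical, and caching or full-stripe writes change the picture in practice.

```python
# Back-end disk I/Os caused by one small random write (classic rule of thumb):
# RAID 5 = read data + read parity + write data + write parity = 4
# RAID 6 = the same with two parity blocks = 6
WRITE_PENALTY = {"0": 1, "1": 2, "10": 2, "5": 4, "6": 6}


def effective_iops(level, n_drives, iops_per_drive, write_fraction):
    """Front-end IOPS an array can sustain for a given read/write mix."""
    raw = n_drives * iops_per_drive
    # Each read costs one back-end I/O; each write costs WRITE_PENALTY[level]
    cost_per_io = (1 - write_fraction) + write_fraction * WRITE_PENALTY[level]
    return raw / cost_per_io


# Hypothetical array: 8 drives at 150 IOPS each, 70% writes
for level in ("10", "5", "6"):
    print(f"RAID {level:>2}: ~{effective_iops(level, 8, 150, 0.7):.0f} IOPS")
```

Under these assumptions RAID 10 sustains about 706 IOPS against about 387 for RAID 5, roughly 1.8 times as much. Full-stripe writes (large sequential writes, or a write-back cache that coalesces them) avoid the read-modify-write cycle, so the penalty mostly hurts small random writes.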

For example, we more than doubled the throughput of Cassandra by dropping RAID 5. So saving 2 disks per server ended up requiring almost twice as many servers. (source)

There is never a case when RAID 5 is the best choice, ever. There are cases where RAID 0 is mathematically proven more reliable than RAID 5. RAID 5 should never be used for anything where you value keeping your data. I am not exaggerating when I say that very often, your data is safer on a single hard drive than on a RAID 5 array. The problem is that once a drive fails, if any of the surviving drives experiences a URE (unrecoverable read error) during the rebuild, the entire array will fail. Consumer-grade SATA drives have a URE rate of 1 in 10^14 bits, so if the data on the surviving drives totals 12 TB, the probability of the rebuild failing is close to 100% (10^14 bits divided by 8 gives 12.5 trillion bytes, or 12.5 TB). Enterprise SAS drives are typically rated at 1 URE in 10^15 bits, so you improve your chances ten-fold. Still an avoidable risk.
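
The arithmetic behind this argument can be checked with the simplest possible model, assuming every bit read fails independently at exactly the rated URE rate. Reading 12 TB is 9.6 × 10^13 bits, so at 1 in 10^14 the expected number of UREs is about 0.96 and the chance of at least one is about 62%; at 1 in 10^15 it drops to about 9%. The rating is a specification figure, so real-world rates vary, but the order of magnitude shows why large parity arrays are risky.

```python
import math


def rebuild_failure_probability(bytes_read: float, ure_per_bit: float) -> float:
    """P(at least one URE) while reading bytes_read, assuming independent bit errors."""
    bits = bytes_read * 8
    # 1 - (1 - p)^bits, computed stably with log1p/expm1 since p is tiny
    return -math.expm1(bits * math.log1p(-ure_per_bit))


TB = 10**12  # decimal terabyte, as used in drive specifications

for label, rate in (("consumer SATA, 1 in 10^14", 1e-14),
                    ("enterprise SAS, 1 in 10^15", 1e-15)):
    expected = 12 * TB * 8 * rate
    p = rebuild_failure_probability(12 * TB, rate)
    print(f"{label}: expected UREs {expected:.2f}, P(rebuild fails) {p:.0%}")
```

The "ten-fold" improvement applies to the expected number of errors; the failure probability itself falls from roughly 62% to roughly 9%.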

RAID 6 suffers from the same fundamental flaw as RAID 5, but the probability of complete array failure is pushed back one level (with one drive lost, the second parity can still correct a URE during the rebuild). This makes RAID 6 with enterprise SAS drives possibly acceptable in some cases, for now (until hard drive capacities get larger).

I no longer use parity RAID. Always RAID 10. (source)

| ACPI State | Description | Power consumption | Comments |
|---|---|---|---|
| S1 | Standby | Low, < 50 W (desktop) | |
| S2 | (not used) | | |
| S3 | "Save to RAM": RAM, USB and PME are powered on. | < 15 W (desktop), lower than S1 | |
| S4 | "Save to File" = Hibernate | Minimum, < 5 W (desktop) | The context is saved to disk. |
| S5 | Computer software-powered off. | | The only difference between the S4 and S5 sleeping states is that no context is saved in S5. |
| G3 | Mechanical off: computer unplugged from power supply. | 0 | |